Moonshots with slingshots

Epic stories about how:

- I built and sold the most epic companies without going insane 🤯

- I helped a whole country fight COVID-19 🦠 with Machine Learning

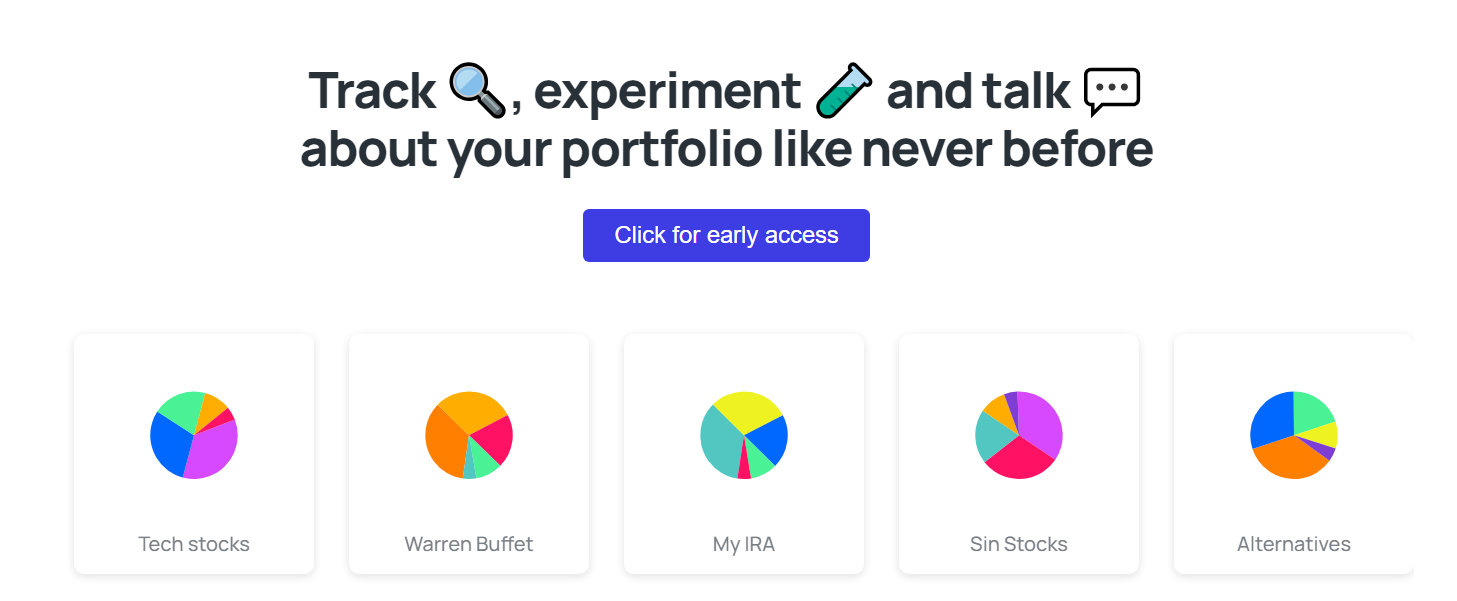

- I created a wealth management company from scratch and kicked Wall Street's ass

- I ran the first arbitrage algorithms on blockchain, made and lost everything (and then made it all back) [...]

- I invested in a unicorn 🦄 by being annoying